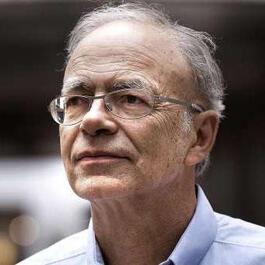

AIAP: On Becoming a Moral Realist with Peter Singer

Are there such things as moral facts? If so, how might we be able to access them? Peter Singer started his career as a preference utilitarian and a moral anti-realist, and then over time became a hedonic utilitarian and a moral realist. How does such a transition occur, and which positions are more defensible? How might objectivism in ethics affect AI alignment? What does this all mean for the future of AI?

On Becoming a Moral Realist with Peter Singer is the sixth podcast in the AI Alignment series, hosted by Lucas Perry. For those of you who are new, this series covers and explores the AI alignment problem across a wide variety of domains, reflecting the fundamentally interdisciplinary nature of AI alignment. Broadly, we will be having discussions with technical and non-technical researchers across areas such as machine learning, AI safety, governance, coordination, ethics, philosophy, and psychology as they pertain to the project of creating beneficial AI. If this sounds interesting to you, we hope that you will join in the conversations by following us or subscribing to our podcasts on YouTube, SoundCloud, or your preferred podcast site/application.

In this podcast, Lucas spoke with Peter Singer. Peter is a world-renowned moral philosopher known for his work on animal ethics, utilitarianism, global poverty, and altruism. He's a leading bioethicist, the founder of The Life You Can Save, and currently holds positions at both Princeton University and The University of Melbourne.

Topics discussed in this episode include:

- Peter's transition from moral anti-realism to moral realism
- Why emotivism ultimately fails
- Parallels between mathematical/logical truth and moral truth
- Reason's role in accessing logical spaces, and its limits
- Why Peter moved from preference utilitarianism to hedonic utilitarianism
- How objectivity in ethics might affect AI alignment

From "Future of Life Institute Podcast"
