Machine Learning Street Talk (MLST)

Welcome! We engage in fascinating discussions with pre-eminent figures in the AI field. Our flagship show covers current affairs in AI, cognitive science, neuroscience and philosophy of mind with in-depth analysis. Our approach is unrivalled in terms of scope and rigour – we believe in intellectual diversity in AI, and we touch on all of the main ideas in the field with the hype surgically removed. MLST is run by Tim Scarfe, Ph.D. (https://www.linkedin.com/in/ecsquizor/) and features regular appearances from MIT Doctor of Philosophy Keith Duggar (https://www.linkedin.com/in/dr-keith-duggar/).

Show episodes

César Hidalgo has spent years trying to answer a deceptively simple question: What is knowledge, and why is it so hard to move around? We all have this intuition that knowledge is just... information. Write it down in a book, upload it to GitHub, train an AI on it—done. But César argues that's completely wrong. Knowled

This is a lively, no-holds-barred debate about whether AI can truly be intelligent, conscious, or understand anything at all — and what happens when (or if) machines become smarter than us. Dr. Mike Israetel is a sports scientist, entrepreneur, and co-founder of RP Strength (a fitness company). He describes himself as

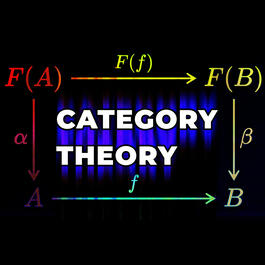

Making deep learning perform real algorithms with Category Theory (Andrew Dudzik, Petar Velichkovich, Taco Cohen, Bruno Gavranović, Paul Lessard)

We often think of Large Language Models (LLMs) as all-knowing, but as the team reveals, they still struggle with the logic of a second-grader. Why can’t ChatGPT reliably add large numbers? Why does it "hallucinate" the laws of physics? The answer lies in the architecture. This episode explores how *Category Theory* —an

Are AI Benchmarks Telling The Full Story? [SPONSORED] (Andrew Gordon and Nora Petrova - Prolific)

Is a car that wins a Formula 1 race the best choice for your morning commute? Probably not. In this sponsored deep dive with Prolific, we explore why the same logic applies to Artificial Intelligence. While models are currently shattering records on technical exams, they often fail the most important test of all: the

What if everything we think we know about AI understanding is wrong? Is compression the key to intelligence? Or is there something more—a leap from memorization to true abstraction? In this fascinating conversation, we sit down with **Professor Yi Ma**—world-renowned expert in deep learning, IEEE/ACM Fellow, and author

Pedro Domingos, author of the bestselling book "The Master Algorithm," introduces his latest work: Tensor Logic - a new programming language he believes could become the fundamental language for artificial intelligence. Think of it like this: Physics found its language in calculus. Circuit design found its language in